
Objective: Discuss the economic incentives driving the evolution of hardware and software and relate this progress to Moore's law. The index for this section is:

Let us start with two basic definitions which may be obvious to most of you. Hardware refers to the physical computer, communication or control equipment. Software refers to the operating system and programs which run on the physical equipment.

Leibniz conceived the idea of a computer in the 17th century. In the 19th century, Babbage made advances in mechanical computers. By World War II, warships had mechanical computers in the form of fire control systems. The first digital computer was built at Iowa State slightly before WWII. After World War II, the Americans built a digital computer using vacuum tubes for military ballistic studies. Like many post-WWII technologies, computers were originally created for military purposes; however, the real advances did not materialize until computers were applied to business problems, which created a large demand and promoted advances through economic competition.

Advances in computers are made possible by advances in microelectronics. This is because (1) mechanical computers are far too large and too inaccurate, and (2) vacuum tube computers consume a great deal of power and break down frequently. What made the computer a commercial success was the invention of an inexpensive, reliable transistor, which required very little power. At first, transistors in computers were individually wired components. Technological advance led to boards with individual components, and finally to boards with integrated circuits. The advance in microelectronics has made the digital computer dominant over other types of computers because of its lower cost and higher performance.

The original computer developed by UNIVAC was funded by the military to solve problems such as the trajectories of shells. Sperry thought the total demand was only about four machines, all for trajectory studies, and so did not push the marketing of computers. IBM saw the business possibilities of computers and developed its reputation not so much by product innovation, but by service and support. The computer market developed in the Fortune 500 companies and has progressively moved to smaller and smaller companies. Currently, the computer is entering the smallest of businesses. The computer is now even entering the home as a mass market item. The economics of the expansion are simple: as the market expands, manufacturing costs fall, which makes the computer a useful device to a larger and larger market and promotes further software development, which in turn fuels the expansion by providing more application software to run on cheaper machines.

Today there is a vast array of different sized computers from small personal computers able to process hundreds of millions of instructions per second to giant supercomputers which can process trillions of instructions per second. A heuristic hierarchy might be personal computers, workstations, minicomputers, mainframes and supercomputers. In addition, special purpose computers act as control devices for numerous industrial activities such as chemical plants and communication exchanges. In new cars, a microprocessor controls the combustion process.

Because of Moore's Law concerning the increase in the number of electronic components on a chip, each new generation of computer is much more powerful than the last. When a new computer is designed, it is designed with an existing microprocessor. After six months to a year, the computer comes to market. To sell the computer the manufacturer makes sure that the computer is backwards compatible which means that it can run all the previous software for previous company machines. For example, 80486 personal computers can run all 80386 software, and likewise the Pentium chip was designed to run both 80486 and 80386 software. After several years the software industry catches up and creates software which explicitly uses the power of the new machine. For example, the new operating systems for the PC clone world are just now taking advantage of the power of the 80386 chip. In the meantime new PC machines have been developed on the next generation of microprocessor, Intel's Pentium, Pentium II and Pentium III series processors. Again there will be a lag of several years before software is developed which fully exploits the capabilities of the new machine.

Apple took a different approach to ensure backward compatibility between the 680x0 chips and the PowerPC RISC chip computers. Apple created a software emulator to run 680x0 software on the newer PowerPC computers. Usually emulators are too slow to be useful, but Apple was able to develop an efficient one.

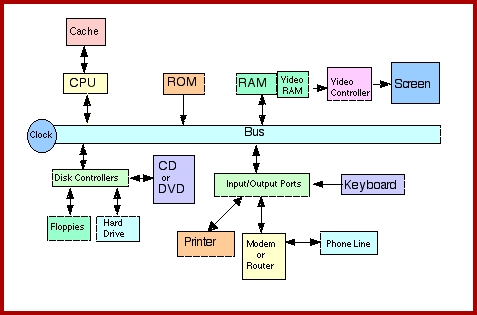

Most computers today are digital computers based on the Von Neumann design principle: (1) a single central processor; (2) a single path between the central processor and memory; (3) the program is stored in memory; and (4) the central processor fetches, decodes, and executes the stored instructions of the program sequentially. Almost all personal computers, workstations, minicomputers, and mainframes are currently Von Neumann design computers. To understand how such a computer works consider the following schematic drawing:

The various components of a computer are:

a. CPU: The central processor unit is the 'brains' of a computer; it contains the circuits that decode and execute instructions. Because of Moore's law, the number of integrated circuits necessary to create a CPU steadily decreased until, in 1975, the CPU for a personal computer could be designed on a single integrated circuit called a microprocessor. Since that time, each generation of microprocessors has been more powerful than the last. Two types of microprocessors are CISC and RISC. Simply put, the instructions for the first type are much more complex than for the latter. Examples of CISC are the Intel 80x86 and Motorola 680x0 series microprocessors. Apple switched to RISC with the introduction of the PowerPC. To do this they had to create an emulator to run previous software. However, Intel is incorporating most of the features of RISC chips in the next generations of its CISC chips. RISC chips are more powerful and are found in workstations such as those from SUN. Besides a single CPU, a Von Neumann design might have several coprocessors, which are subordinate processors for arithmetic and fast video displays. The latest advance is to put two cores (processors) on a single microprocessor chip.


b. Memory Pyramid: As CPUs have become faster and more powerful, a fundamental bottleneck in computer design is the flow of information back and forth between memory and the CPU. As video processing becomes more important, the need to move large amounts of data quickly from storage to the CPU grows accordingly.

There are now many types of memory devices with different costs, access times and storage capacities. Generally the faster the access time, the more expensive the memory. Consequently, good design is based on a memory pyramid that provides increasing amounts of less costly, slower memory at each lower level.
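To see why a small amount of fast memory on top of a large amount of slow memory can work well, here is a minimal sketch in Python of the average access time for a two-level pyramid. The function name `average_access_time` and the access times of 5 ns and 60 ns are hypothetical figures chosen only for illustration; they are not measurements of any particular machine.

```python
def average_access_time(hit_ratio, fast_ns, slow_ns):
    """Expected access time when a fraction hit_ratio of requests is served
    by the fast level and the remainder falls through to the slow level."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * fast_ns + (1.0 - hit_ratio) * slow_ns


# Hypothetical access times, assumed only to show the shape of the trade-off.
CACHE_NS = 5.0
RAM_NS = 60.0

for hit_ratio in (0.0, 0.5, 0.9, 0.99):
    t = average_access_time(hit_ratio, CACHE_NS, RAM_NS)
    print(f"hit ratio {hit_ratio:.2f}: {t:.2f} ns on average")
```

Under these assumed numbers, the closer the hit ratio gets to one, the closer the whole system comes to the speed of its most expensive level, even though most of the storage is the cheap kind.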

(1) Cache memory: This generally is memory placed on the CPU or is attached with a special fast bus. It is the fastest and most expensive, so little is used.

(2). RAM (DRAM and SRAM) and ROM: These are various types of memory chips directly accessible to the computer. The two most important types of random access memory, RAM, are dynamic (DRAM) and static (SRAM). The advantage of the latter is that it does not need to be refreshed constantly and is faster, although it costs more per bit; both types lose their contents when you turn off the computer. Read only memory, ROM, is a special type of memory for reading but not writing, and it does retain its contents without power. This type of memory is useful for storing frequently used software, for which the user needs rapid access but has no need to modify. Memory chips are more expensive and faster than the various types of magnetic and laser disk memories.

As the cost of a bit of integrated circuit storage has been decreasing by about 30-35% each year, it is not surprising that each new generation of computers has much greater RAM storage than the previous generation. For example, the Apple II had 48K and was expandable to 64K; the Mac started at 128K and now is rarely purchased with less than 64M of RAM. (K stands for one thousand and M stands for one million.) Similarly, a buyer would currently purchase a PC with as much as 128M of memory.
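The effect of a 30-35% annual decline is easier to appreciate when it is compounded. The following Python sketch assumes the decline is constant from year to year, which is a simplification; the function names `cost_after` and `years_to_fall_by` are made up for this example.

```python
import math


def cost_after(years, annual_decline, start_cost=1.0):
    """Cost per bit after compounding a constant annual decline."""
    return start_cost * (1.0 - annual_decline) ** years


def years_to_fall_by(factor, annual_decline):
    """Years needed for the cost to fall to 1/factor of its starting value."""
    return math.log(factor) / -math.log(1.0 - annual_decline)


for decline in (0.30, 0.35):
    remaining = cost_after(10, decline)
    halving = years_to_fall_by(2, decline)
    print(f"{decline:.0%} per year: {remaining:.1%} of the original cost "
          f"after 10 years, halving about every {halving:.1f} years")
```

Under this assumption the cost of a bit halves in less than two years, and after a decade it is only a few percent of where it started, which is consistent with the jump from kilobytes to megabytes of RAM described above.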

(3). Magnetic Disk: These are magnetic disks of various types, such as floppies (5 1/4" and 3 1/2") and hard disks. These devices are cheaper and can store much greater amounts of data than RAM, but have slower access time, which is the amount of time it takes the computer to read information from the magnetic disk into RAM. Over time technological advances make it possible to store increasing amounts of information per square inch of disk space. For example, firms are now selling 3 1/2" floppy disk drives with a capacity of 100M per diskette. Generally a computer has a much bigger hard disk memory than the memory on integrated circuits. Also, over time computers have increasing amounts of magnetic disk space. The advances in magnetic disks in terms of capacity and cost are analogous to Moore's Law.

(4). Bubble memory: This is an expensive, magnetic type of memory device which currently has not achieved its potential. Bubble memory has the potential to store the information contained in the Library of Congress on a device the size of a 1/4 inch cube. This type of memory has some applications in portable computers.

(5). Laser Disk: A new type of memory device is the laser disk, which can store very large amounts of data. This type of memory device is beginning to be used in library applications. CD-ROM refers to read-only laser disks, which are cheaper but much slower than hard disks. With the advance of laser disk technology, CD-ROM technology is now being replaced with DVD technology. DVD disks are the same size as CD-ROM disks so that a DVD player can play CD-ROMs. The advantage of DVD technology is the increased storage capacity, so that a DVD can hold a feature-length movie. DVDs will probably gradually replace VHS tapes like the ones rented from BlockBuster. There is a battle over standards for DVDs that may not be resolved until 2007 or so.


(6). Holographic memory: This is a new type of memory based on holographic patterns which is just now entering the market. The commercial potential for this type of memory device is very large because it is fast and can store a prodigious amount of information. Thus, this type of memory will be very useful in multimedia computers because the processing power of microprocessors has advanced much faster than the ability to move the large amounts of data required for dynamic images from memory to the CPU.


c. Input/Output Devices

Keyboard, mouse and video screen

Printers: Dot matrix impact, Inkjet and Laser

Modem to phone: You can connect your computer to a network over an analog phone line with an effective capacity of about 40K bits/second, over a DSL line with a capacity of 1.5M bits/second, or over a cable modem, whose capacity is higher than DSL. US capacity is way behind other countries such as Japan, where connection speed is about 20M bits/second.
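To put these connection speeds in perspective, the Python sketch below computes how long one file would take to transfer at each of the three rates quoted above. The 5-million-byte file size and the name `transfer_seconds` are assumptions made for the example, and the calculation ignores protocol overhead and line noise.

```python
# Link speeds in bits per second, taken from the figures quoted in the text.
LINK_SPEEDS_BPS = {
    "analog phone modem": 40_000,
    "DSL": 1_500_000,
    "20M bits/second line": 20_000_000,
}


def transfer_seconds(size_bytes, bits_per_second):
    """Idealized transfer time: eight bits per byte, no overhead."""
    return size_bytes * 8 / bits_per_second


FILE_BYTES = 5_000_000  # hypothetical file size, assumed for illustration

for name, speed in LINK_SPEEDS_BPS.items():
    print(f"{name}: {transfer_seconds(FILE_BYTES, speed):.1f} seconds")
```

With these numbers the same file that ties up an analog phone line for over a quarter of an hour arrives in a couple of seconds on the fastest link.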

Currently a great R&D effort is being made on pen input devices which can read handwriting, such as the Palm Pilot. This is a problem in pattern recognition. Also, voice input devices are now becoming a part of PCs. Again, this is a serious problem in pattern recognition.


d. Bus: The bus is the electric circuit connecting the components of the computer together. The more powerful the computer, the bigger the bus, where bus size refers to the number of bits which can be communicated at one time. The trend in personal computers has moved from 8 to 16 to 32 bits and is currently moving to 64-bit buses. As we move towards multimedia, the need to move large blocks of data very quickly will increase.

e. Clock: The clock synchronizes the components of a computer to work together. A faster clock enables the computer to execute more instructions a second. Since the 1970s, clocks on personal computers have increased from 1 to over 400 million cycles a second. The faster the clock the more heat is generated and the more steps must be taken to keep the electronic devices cool. Personal computers currently have two clocks: one for the CPU and one for the bus with the CPU clock running up to 10 times faster than the bus clock. The bus clock speed must be increased as we move into processing dynamic images.
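The way these components cooperate under the Von Neumann principle (program stored in memory, instructions fetched, decoded and executed one at a time) can be sketched with a toy machine in Python. The four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`) and the single accumulator register are invented for this illustration and do not correspond to any real microprocessor.

```python
def run(memory):
    """Execute the program stored in memory, starting at address 0.

    Instructions and data share the same memory, as in a Von Neumann design.
    Each instruction is a (opcode, operand_address) pair.
    """
    pc = 0   # program counter: address of the next instruction
    acc = 0  # accumulator: the single working register
    while True:
        opcode, operand = memory[pc]  # fetch
        pc += 1
        if opcode == "LOAD":          # decode, then execute
            acc = memory[operand]
        elif opcode == "ADD":
            acc += memory[operand]
        elif opcode == "STORE":
            memory[operand] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r}")


program = [
    ("LOAD", 4),   # address 0: acc = memory[4]
    ("ADD", 5),    # address 1: acc = acc + memory[5]
    ("STORE", 6),  # address 2: memory[6] = acc
    ("HALT", 0),   # address 3: stop
    2,             # address 4: data
    3,             # address 5: data
    0,             # address 6: the result is written here
]

result = run(list(program))
print(result[6])  # prints 5
```

Every instruction and every piece of data passes along the single path between memory and the processor, which is why that path becomes the bottleneck discussed under the memory pyramid.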


A parallel computer has more than one CPU controlled by a single operating system. Some problems are intrinsically sequential, and for them there is no possible gain from having more than one CPU. Unlike a Von Neumann design where all designs are variations on the basic principles, a parallel computer has many alternative designs, each of which is best suited for a particular class of problems. Alternatives cover:

In the past, parallel computers tended to be supercomputers at the limit of computational capabilities. An example is IBM's Deep Blue computer, which beat the world chess champion, Garry Kasparov, in 1997. This was more a matter of brute force in looking at very large numbers of alternatives than 'thinking' like a chess player. However, consumers are now able to purchase dual core computers from any computer manufacturer. The dual core processors provide an increase in overall performance, but have the greatest value under multi-tasking conditions.
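The remark that intrinsically sequential problems gain nothing from extra processors is usually quantified with Amdahl's law, which the text does not name but which fits the argument. A minimal Python sketch follows; the function name `speedup` and the sample fractions are chosen for illustration only.

```python
def speedup(parallel_fraction, processors):
    """Amdahl's law: overall speedup when only parallel_fraction of the
    work can be spread over the given number of processors."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


for fraction in (0.0, 0.5, 0.9, 1.0):
    print(f"parallel fraction {fraction:.1f}: "
          f"2 processors give {speedup(fraction, 2):.2f}x, "
          f"64 processors give {speedup(fraction, 64):.2f}x")
```

A purely sequential job (fraction 0.0) runs no faster on any number of processors, while a job that is half sequential can never run more than twice as fast, however many processors are added.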

A multi-core system is where several processors are placed on the central processing unit instead of having multiple CPUs. Currently the two main producers of multi-core systems for the consumer market are AMD and Intel. One concern to the manufacturers of multi-core systems is software licensing. Many software applications are not licensed by the computer but instead are licensed by the processor. For now, Microsoft has agreed to treat multi-core CPUs as single processor rather than as multiple processors. Multi-core parallel computing also has the advantage of being easier to integrate into the current PC manufacturing complex. This is because multi-core CPUs fit into the current sockets of motherboards, which means that there does not have to be a reorganization of the production method to provide parallel computing to the masses.

One early development in parallel processors was the development of supercomputers by Seymour Cray and Control Data that had a very small number of very powerful processors that had hardware for array operations, such as a vector dot product. Cray was bought out by SGI and then came back as a separate firm, Cray. These types of parallel processors are primarily used in scientific computing. Remember there is no limit to the power of a computer that weather forecasters can use. The more powerful the computer the finer the grid of the biosphere they will use in the computations. Parallel computers are also used as servers in situations where the server has to process a very large number of requests.

An alternative approach for business computing such as internet servers is using large numbers of PC like processors. Here there is an economic concept called scalable computing. Suppose a firm buys a computer and as demand on the computer increases the firm needs a bigger computer. The old approach would be to replace the current computer with a bigger more powerful computer. With parallel processing based on PC type processors the new approach is to expand the computer with the addition of additional clusters of PC type processors. Among the several firms taking this approach are HP and Sun. Server computers are increasingly tending to be parallel processors. The technical problem to overcome in scalable computing is how to effectively cluster the PC type processors. The master at building supercomputers is IBM.


Artificial Neural Networks, ANN

An artificial neural network, ANN, is a software simulation of a biological neural network, or brain, run on a conventional computer. Because it would be much faster to have integrated circuits specifically designed as ANNs, firms are creating special-purpose ANN ICs such as Accurate Automation Corporation's NNP line.

Some definitions of ANN are:

A diagram of a simple feedforward ANN is shown below:

Inputs are entered at the input layer. Note that each element in the input layer is connected to each element of the hidden layer. An element of the hidden layer activates if the weighted sum of its inputs is greater than the activation threshold for that element. Each element in the hidden layer is connected to each element in the output layer. Again, an element of the output layer fires if the weighted sum of the outputs from the hidden layer is greater than its activation threshold.
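The feedforward computation just described can be sketched in a few lines of Python. The weights and thresholds below are hand-picked, hypothetical values that happen to make a two-input network compute exclusive-or; the function names `layer_output` and `feedforward` are likewise invented for this example.

```python
def layer_output(inputs, weights, thresholds):
    """Each element fires (outputs 1) if the weighted sum of its inputs
    is greater than its activation threshold; otherwise it outputs 0."""
    outputs = []
    for element_weights, threshold in zip(weights, thresholds):
        total = sum(w * x for w, x in zip(element_weights, inputs))
        outputs.append(1 if total > threshold else 0)
    return outputs


def feedforward(inputs, hidden_w, hidden_t, output_w, output_t):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = layer_output(inputs, hidden_w, hidden_t)
    return layer_output(hidden, output_w, output_t)


# Hypothetical hand-picked network: hidden element 1 acts like OR,
# hidden element 2 acts like AND, and the output fires for OR-but-not-AND.
HIDDEN_W = [[1, 1], [1, 1]]
HIDDEN_T = [0.5, 1.5]
OUTPUT_W = [[1, -1]]
OUTPUT_T = [0.5]

for pair in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(pair, feedforward(pair, HIDDEN_W, HIDDEN_T, OUTPUT_W, OUTPUT_T))
```

Nothing in the code says "exclusive-or"; the behavior lives entirely in the weights and thresholds, which is the sense in which an ANN is not programmed like a conventional computer.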

ANNs are not programmed like conventional computers; rather, they are trained either with or without a teacher. In supervised learning the ANN is subjected to a large number of training sets of data, and the weights and thresholds are adjusted to minimize the error in its predictions of the training set data. Any type of optimization algorithm can be used to train an ANN, and researchers have explored alternatives from simple gradient procedures to genetic algorithms. The model of the process is the final weights and thresholds of the various elements. It is not an explicit set of mathematical equations. In unsupervised learning the ANN simply processes the data sets, discovering patterns. There are numerous technical problems to overcome in obtaining good performance from an ANN.
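As a minimal sketch of supervised learning, the Python code below trains a single threshold element with the classic perceptron error-correction rule, one of the simplest training procedures and only a stand-in for the more elaborate optimization methods mentioned above. The training data (the logical AND of two inputs), the learning rate of 1, and the function names are all assumptions made for the example.

```python
def predict(weights, threshold, inputs):
    """Fire (1) if the weighted input sum exceeds the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > threshold else 0


def train(samples, learning_rate=1, max_epochs=100):
    """Adjust weights and threshold until every training sample is
    predicted correctly, or until max_epochs passes have been made."""
    weights = [0] * len(samples[0][0])
    threshold = 0
    for _ in range(max_epochs):
        errors = 0
        for inputs, target in samples:
            error = target - predict(weights, threshold, inputs)
            if error != 0:
                errors += 1
                # Nudge each weight toward the target, and move the
                # threshold in the opposite direction.
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                threshold -= learning_rate * error
        if errors == 0:
            break
    return weights, threshold


# Hypothetical training set: the logical AND of two binary inputs.
AND_SAMPLES = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

weights, threshold = train(AND_SAMPLES)
for inputs, target in AND_SAMPLES:
    print(inputs, "target", target, "predicted",
          predict(weights, threshold, inputs))
```

The trained "model" is nothing more than the final list of weights and the threshold, which matches the point that the result of training is not an explicit set of equations.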

As NeuroDynamX points out, ANNs have numerous applications:

"Neural networks often outperform traditional approaches at pattern recognition, classification, data clustering and modeling of nonlinear relationships. DynaMind® Developer Pro lets you access neural network technology for a variety of applications."

While an ANN is a massively parallel processor, the term parallel processor usually refers to digital computers with more than one CPU controlled by one operating system and programmed with specific instructions. Hence, an ANN can be simulated in a parallel processor.


Distributed Processing

Distributed processing is processing that takes place in a network of computers controlled by separate operating systems. Coordinating distributed processing is more complicated than parallel processing because of the different operating systems.

Originally corporations had standalone computers which were fed input from cards and magnetic tapes. Early evolution was towards more powerful mainframes. The next step was systems of terminals connected to the corporate mainframe.

The mainframe, generally IBM, with up to hundreds of dumb terminals was a big advance in that instead of having to submit cards to the mainframe each computer user could manage his or her jobs using keyboard input. Since the mainframe was a sequential computer it did not process all the input from the users simultaneously, but rather proceeded to devote a small time slot of the central processor resources to each job in sequence. The downside of this approach was that the operating system of the mainframe spent a considerable overhead in shifting from one job to another.
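The time-slot scheme and its switching overhead can be illustrated with a small round-robin simulation in Python. The job names, their lengths, the slice of 2 time units and the switch cost of 1 unit are hypothetical values, and a real mainframe scheduler would be far more elaborate.

```python
from collections import deque


def round_robin(jobs, time_slice, switch_cost=0):
    """Give each job a small time slot in sequence until all are done.

    jobs maps a job name to the time units it needs.
    Returns (list of (name, finish_time), total elapsed time).
    """
    queue = deque(jobs.items())
    clock = 0
    finished = []
    while queue:
        name, remaining = queue.popleft()
        run_for = min(time_slice, remaining)
        clock += run_for
        remaining -= run_for
        if remaining > 0:
            queue.append((name, remaining))  # back of the line
        else:
            finished.append((name, clock))
        # Overhead of shifting the processor to a different job.
        if queue and queue[0][0] != name:
            clock += switch_cost
    return finished, clock


# Hypothetical jobs submitted from three terminals.
JOBS = {"payroll": 5, "inventory": 2, "report": 3}

print(round_robin(JOBS, time_slice=2))
print(round_robin(JOBS, time_slice=2, switch_cost=1))
```

With no switching cost the three jobs take 10 time units in total; charging one unit per switch stretches the same work to 15 units, which is the overhead the paragraph above refers to.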

Then came the explosion of personal computers. At first personal computers were standalone units on employees' desks. Because these PCs were not linked to the corporate mainframe, employees had a difficult time obtaining corporate data for their work. This difficulty created incentives to link these personal computers into networks.

A rapidly growing type of computer network is a client-server network, which is a network of clients, which are PCs, Macs or workstations used by employees, connected to a server which furnishes clients with such things as huge disk drives, databases, or connections to a network. Servers can be mainframes, minicomputers, workstations, or powerful PCs. More than one server can supply services to the clients. In creating a client-server network, it is frequently efficient to replace an expensive corporate mainframe with a much less costly, powerful workstation. This is known as downsizing. A client-server network is a distributed processing system because some processing takes place at each node. There are many physical organizations and two control mechanisms.

Physical organization

Bus - single backbone, terminated at both ends

Ring - connects one host to the next, and the last to the first (picture below is ring organization)

Star - connects all devices to a central point (picture above of IBM with dumb terminals is star organization)

More advanced are the Extended Star, Hierarchical and Mesh organizations; a mesh interconnects all devices with all other devices

Control Mechanism

Token Passing - a single electronic “token” is passed around and computers send data one at a time (only while possessing the token). Inefficient.

Broadcast - each host sends data to all other hosts on the network on a first come, first served basis. More efficient (cannot be used on star organization).
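The token passing mechanism listed above can be sketched as a short Python simulation of a ring. The station names, the queued messages and the rule of one message per token hold are hypothetical simplifications; real token-passing networks add timing and error handling that are omitted here.

```python
def token_ring(stations, pending):
    """Pass a token around the ring; only the holder may send.

    stations is the list of station names in ring order.
    pending maps a station name to its list of waiting messages.
    Returns the (sender, message) pairs in the order they were sent.
    """
    queues = {name: list(pending.get(name, [])) for name in stations}
    sent = []
    holder = 0  # index of the station currently holding the token
    while any(queues.values()):
        name = stations[holder]
        if queues[name]:
            # Assumed rule: one message per token hold.
            sent.append((name, queues[name].pop(0)))
        holder = (holder + 1) % len(stations)  # pass the token onward
    return sent


# Hypothetical ring of three stations; B has nothing to send.
RING = ["A", "B", "C"]
WAITING = {"A": ["a1", "a2"], "C": ["c1"]}

print(token_ring(RING, WAITING))
```

Station A must wait for the token to travel all the way around the ring, including past the idle station B, before it can send its second message, which illustrates why token passing is described as inefficient.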

A simple type of client-server network is one where the clients are connected in the digital analog of a party line formerly found in rural areas.

A client-server network has many advantages. If a client machine fails, the network remains operational. Such a network has tremendous computer power at a low cost because powerful workstations are as powerful as older mainframes at one-tenth the cost. You can pick and choose hardware, software, and services from various vendors because these networks are open systems. Such systems can easily be expanded or modified to suit individual users and departments. The disadvantages of such systems are that they are difficult to maintain, lack support tools, and require retraining the corporate programming staff.

Today the Internet is the largest distributed processing system in the world. A new development in distributed processing is grid computing where computations take place across a network where the individual computers are in different administrative domains.


DNA Computer

An experimental type of computer that has the potential to solve NP problems efficiently is a DNA computer. The first DNA computer was created at the University of Southern California by Len Adleman. Earlier designs for DNA computers used ATP (a phosphate molecule that provides energy for cell functions) to power the computer. A major breakthrough occurred when Israeli scientists at the Weizmann Institute used DNA as the computer's energy source, software and hardware. This allowed them to have 15,000 trillion DNA computers in a spoonful of liquid, and it also allowed them to achieve over 1,000,000 times the energy efficiency of a normal PC. In terms of storage, DNA computers have enormous potential, since 1 cubic centimeter of DNA has the potential to store over one trillion compact discs' worth of data. However, current DNA computers are very elementary and face several limitations. These include only being able to answer yes/no questions, and being unable to count. Their strength is in being able to solve many different tasks simultaneously rather than solving one task very quickly, much like parallel computing. On the commercial front, Olympus Optical has built the “first commercially practical DNA computer that specialized in gene analysis.” They plan on selling the abilities of this computer as a service to researchers. The major developments of DNA computers are expected to be in computers that can be put into the human body and check for diseases and cancers. In this regard, the Weizmann Institute has developed a medical kit capable of diagnosing cancers in a test tube, but its scientists believe they are still decades away from being able to deploy it in humans.


Our interest in software is not that of a programmer, but rather an economist. What are the market forces operating on the evolution of software? We are concerned with the increasing capability of software as it moves from number crunching to multimedia applications on networks. We are also interested in the increased efficiency of programmers and how market forces tend to make software more `user friendly.'

Software Basics

We will consider three aspects of software:

Because of Moore's Law computers at all levels are becoming more powerful. This means:

More features:

Initially computers were number crunchers for science and accounting. Next software was created for text processing. Then as computers became more powerful, increasing amounts of software was created for graphics. Because man is a visual animal, the trend will be towards ever more powerful multimedia software. Personal computers are just now acquiring sufficient power to process dynamic visual images. Apple is now selling the iMac with a digital video cam, DVD and the software to edit videos. If you are into computer games, reflect for a moment on the increased dynamics and realism of the pictures.

In the future, virtual reality that might be described as a 3-D animated world created on a computer screen should become commonplace. The viewer wears a special glove and helmet with goggles which enables him or her to interact with the 3-D world. Virtual reality is great for computer games and has numerous business applications. For example, engineers can determine if parts fit together in virtual space. As the number of electronic components on an integrated circuit continues to increase, software will be created to edit videos on personal computers.

Software developers have powerful incentives to use the ever increasing power of computers to constantly create new software to perform services for users. One aspect of creating ever more powerful software is to continually add new features to software packages such as word processors. Another is to specialize software into niche markets such as specialized CAD programs for each industry. In addition, software programs are created for new human tasks. Operating systems become ever more complicated in adding features for multitask management, security, and Internet integration.


More efficiency in program creation:

In order to compete, software companies constantly innovate to reduce the cost of creating software. Originally, programs for a computer had to be written in machine code, that is, binary numbers for each operation. Because humans do not think in terms of strings of binary numbers, this meant the development of software was a slow, tedious affair. The first innovation in programming was assembly language, which substituted a three letter code for the corresponding binary string, such as ADD for the binary string that means add. In the 50s computer scientists invented FORTRAN, which allowed engineers and scientists to write programs as equations, and COBOL, which allowed business programmers to write programs in terms of business operations. In the 50s and 60s, when memory was very expensive, the year was recorded with two digits. This was expected to cause a major problem in the year 2000, known as the Y2K problem, because software that had not been upgraded would treat the year 2000 as the year 1900. Industry and government spent billions of dollars to correct the problem, and the fiascos turned out to be minor.
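The Y2K problem is easy to reproduce. The Python sketch below computes a person's age from years stored with two digits and with four digits; the function names and the sample birth year of 1965 are hypothetical, chosen only to show the arithmetic going wrong.

```python
def age_two_digit(birth_yy, current_yy):
    """Age computed from years stored as two digits, as in old software."""
    return current_yy - birth_yy


def age_four_digit(birth_year, current_year):
    """Age computed from full four-digit years."""
    return current_year - birth_year


# Hypothetical person born in 1965, evaluated in 1999 and again in 2000.
print(age_two_digit(65, 99), age_four_digit(1965, 1999))  # both give 34
print(age_two_digit(65, 0), age_four_digit(1965, 2000))   # -65 versus 35
```

Saving two digits per date was a sensible economy when memory was expensive; the cost only appeared decades later when the two-digit year rolled over from 99 to 00.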

Since that time, computer scientists have developed thousands of languages. The applications programmer generates statements in the language most suited for the application and a translator (an assembler, an interpreter, or a compiler) transforms the statements of the language into machine language for execution. One trend in languages is specialization, such as Lisp and Prolog for artificial intelligence. A second trend is to incorporate new concepts, such as structured programming in Pascal. Another trend is to incorporate more powerful statements in new languages. For example, being able to perform matrix operations in a single statement rather than write a routine to process each matrix element. Finally, effort is made to constantly improve the efficiency of the machine code created by translators.

Because it is much easier to automate the production of hardware than software, software development has become the bottleneck in the expansion of computation. One method of making programmers more efficient is to create libraries of subroutines. This means that rather than start from scratch with each program, the programmer can write code that employs the appropriate subroutines. Because there is a very large investment in FORTRAN and COBOL libraries and programs, and new features are constantly being added, these languages were actively used until the 80s.

The current rage is the move towards Object Oriented Programming, OOP, which can be considered an innovation on the idea of writing code using libraries of subroutines. The new wrinkle is to expand the concept of a subroutine to include not only code but also data. The data-code modules in OOP have three basic properties: encapsulation, inheritance and polymorphism. Encapsulation means that each module is a self-contained entity. Inheritance means that if you create a module A from module B which contains a subset of the data in A, module A inherits all the code which runs on module B. Polymorphism means that general code can be applied to different objects, such as draw command to draw both a square and a circle simply from their definitions. A current example of an OOP language is C++.
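The three properties can be seen in a few lines of code. The sketch below uses Python rather than C++ to stay short, and the classes `Shape`, `Square` and `Circle` are invented for the illustration, following the square-and-circle example in the text; `draw` simply returns a description instead of painting on a screen.

```python
import math


class Shape:
    """Base module: data (the name) and the code that works on it."""

    def __init__(self, name):
        self._name = name  # encapsulation: data kept inside the object

    def area(self):
        raise NotImplementedError("each kind of shape defines its own area")

    def draw(self):
        # General code written once and inherited by every subclass.
        return f"{self._name} with area {self.area():.2f}"


class Square(Shape):
    def __init__(self, side):
        super().__init__("square")
        self._side = side

    def area(self):
        return self._side ** 2


class Circle(Shape):
    def __init__(self, radius):
        super().__init__("circle")
        self._radius = radius

    def area(self):
        return math.pi * self._radius ** 2


# Polymorphism: the same draw command works on both kinds of object.
for shape in (Square(2), Circle(1)):
    print(shape.draw())
```

Neither `Square` nor `Circle` contains a `draw` method of its own; both inherit it from `Shape`, and the single loop at the end treats them identically.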

From the perspective of economics, the concept of OOP is an example of the specialization of labor. OOP improves efficiency because highly skilled, creative programmers will create libraries of OOP modules. Users with some programming skills will then use these modules to easily create their application programs. More advanced is the development of toolkits for programmers which write standard software for routine operations, for example, sending text to the printer. With Visual Cafe, Symantec created a visual way to lay out the graphical interface, from which the software generated the Java code.

Error control in program development: Creating software is still a labor intensive effort and large systems such as Windows 2000 can involve thousands of programmers. What is needed for better software development is a systematic approach to quality control to reduce the number of errors. When Netscape and Microsoft competed with their browsers they had incentives to compete in the number of new features not so much in code that was bug free. Debugging was done in response to consumer complaints. In many instances this is entirely unacceptable. You can not debug an air traffic control program in response to aircraft crashes or debug a program to control a nuclear reactor in response to accidents that release radiation.


User friendly:

Another very important software cost is the cost of learning how to use an application program. For example, how long do you have to send a secretary to school to use a new word processor effectively? As computer memories grow and computers become faster, part of this increased memory and speed is used to develop operating systems and application programs which are much easier for the final user to learn how to use. While in large computer systems the trend has been to develop interactive operating systems so that many users can interact with the computer at the same time, the trend in personal computers has been to develop visual, icon-based, mouse-driven operating systems. The great advantage of an icon-based operating system is that it is intuitive to man, a visual animal. Moreover, Apple has insisted that all software developers use the same desktop format in presenting programs. While such a strategy meant that a considerable portion of Macintosh resources were devoted to running the mouse-icon interface, Apple was successful with the Macintosh because the user does not have to spend hours over manuals to accomplish a simple task. With Windows the PC clone world is imitating Apple. Windows 95 and Windows NT are almost as easy to use as the MacOS.

In a similar vein, software developers constantly try to reduce the user's labor costs in using application software. One example is the trend towards integrated software. At first, it was very time-consuming and labor-intensive to transfer information from one type of software program to another. The user had to print out the information from one program and input it to another. Today, in packages called office suites, word processors are integrated with such programs as spreadsheets, drawing programs, and file programs so that information transfers automatically from one type of program to another. The frontier in integration is creating software which facilitates group interaction in networks of computers. An example here is Lotus Notes. Group integration software improves the productivity of work groups in their joint efforts. Thus, integration simultaneously makes software more powerful and easier to use.

Computers will never be common home items until they are much easier to use. The long term vision is to have computers which are controlled by English language operating systems and programs. This will require major advances in voice pattern recognition and in creating English type computer languages. This, in turn, may require the integration of neural network computers for pattern recognition with the current type digital computers.

User Friendly

Some issues:

Thin versus fat: The advance of the Internet as the network of client-server networks raises an important question concerning the appropriate power and software for each entity in the network. With Microsoft Windows development, the operating system is replete with features that the average user seldom uses. Application programs are likewise replete with features that the average user may seldom use. Sun's distributed processing model relies on thin clients (machines with little power and few resources such as hard drives). These thin clients would need only a simple operating system to run a Web browser, and the user would download only those components of software that he or she actually needed, or the user might run the job remotely on a powerful server. How the Internet will evolve is an open question.

The development of ever more powerful personal computers, operating systems, and programs has led to a potential challenge to the current trend. For most purposes, such as using the Internet, the user does not need a powerful PC or a powerful operating system with numerous features the typical user does not use. Likewise, most software programs contain numerous features that the typical user does not use. The slang term is bloatware. Sun, in its competition with Microsoft, has proposed thin clients: much simpler personal computers that have a simple operating system and download only those components of software packages that they actually need. Obviously, Sun would like the language for this approach to be its very own Java. As economists, you should realize two important features of this conflict. First, given that Microsoft controls 90 percent of the operating system market, a shift would require a massive investment. Microsoft has also shown remarkable agility in shifting direction when need be, and it has an operating system, Windows CE, that probably could run thin clients. While such a shift has economically desirable features, it may not happen any time soon. Second, for some tasks, a user wants as powerful a personal computer as is economically possible to make. One simple example is gaming. What this means economically is that we should expect increasing specialization in all sizes of personal computers and other digital devices such as Palm computers.

Artificial intelligence is the attempt to endow hardware and software with intelligence. This field, which started after World War II, has had numerous successes, but it is important to separate hype from reality. Remember that researchers in all fields have an incentive to oversell the economic potential of their field in order to obtain government funding. We will examine artificial intelligence from the perspective of economics. In viewing the field of artificial intelligence it is important to avoid extremes. Those of you who enjoy science fiction movies may incorrectly believe that humans have already created androids with human capabilities. At the other extreme are critics who claim that computers will never think. From the perspective of economics, you need never ask whether programs are capable of thought; rather, you need to ask how increasingly capable software will affect economic behavior. It is a safe assumption that the capabilities of programs will advance much faster than the creation of intelligence by natural selection in animals.

The production function, q = f(K,L), specifies the output, q, that can be produced from each combination of capital, K, and labor, L. Hardware and software are forms of capital. As the capabilities of software advance, economic incentives towards innovation mean that the new software is applied to the production process in a new combination of K and L. Generally, to be an innovation, the production process has to be completely reorganized and frequently the output is changed.
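To make the substitution between K and L concrete, here is a minimal sketch that assumes a Cobb-Douglas form for f. The functional form, the parameter values A and alpha, and the function names are illustrative assumptions and do not come from the text above, which leaves f general.

```python
def output(K, L, A=1.0, alpha=0.3):
    """Assumed Cobb-Douglas example of q = f(K, L): q = A * K**alpha * L**(1 - alpha)."""
    return A * K**alpha * L**(1 - alpha)


def labor_for_output(q, K, A=1.0, alpha=0.3):
    """Labor needed to produce q with capital K under the same assumed form."""
    return (q / (A * K**alpha)) ** (1 / (1 - alpha))


# Hypothetical firm: 100 units of capital and 100 units of labor.
q_before = output(K=100.0, L=100.0)

# Suppose better hardware and software double the capital stock.
# How much labor is then needed to produce the same output?
labor_after = labor_for_output(q_before, K=200.0)

print("output before:", q_before)
print("labor needed after capital doubles:", labor_after)
```

Under these assumed numbers the same output is produced with less labor, which is the sense in which more capable software leads to a new combination of K and L.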

For software to provide an economic service, the software program need not contain any artificial intelligence whatsoever. For example, the software for an ATM enables the customer to select the desired service from a series of menus by pushing buttons. Let us consider how menu programs are affecting the travel agency business. With the advance of the Internet, the airline industry will make greater profits if customers buy their tickets online, which greatly reduces labor costs. The airlines have cut subsidies to travel agents, so that many travel agents now must charge fees for their services. This greatly improves the market for dot-coms like Orbitz, which sell airline tickets to customers using menus. The software behind the scenes is more complicated, but could hardly be considered capable of thought.

Now let us consider the types of software created by artificial intelligence researchers and the types of problems they have made some headway in solving.

Expert or Knowledge-Based Systems: Obviously, the capability of software to provide economic services is enhanced by the incorporation of artificial intelligence. Let us first consider the advance of expert systems, which AI professionals prefer to call knowledge-based systems. These expert services in many cases are specialized information services which provide opinions or answers. The basis of an expert system is the knowledge base which is constructed by a knowledge engineer consulting with the expert. A rule of thumb is that if a problem takes less than twenty minutes for the expert to solve, it is not worth the effort, and if it takes over two hours, it is too complicated. The knowledge engineer attempts to reduce the expert's problem solving approach to a list of conditional (if) statements and rules. The expert program provides a search procedure to search through the knowledge base in order to solve a problem. A particular problem is solved by entering the facts of the case. The knowledge incorporated in most expert programs is empirical not theoretical knowledge. Thus, this approach works best on problems which are clearly focused. Expert programs have had some market successes since the 70s:

a. XCON of Digital: This expert program configures VAX computers for customers and makes fewer mistakes than humans.

b. Dipmeter Advisor of Schlumberger: This expert program interprets readings from oil wells and performs as well as a junior geologist 90% of the time.

c. Prospector of the US Geological Survey: This expert program found a major deposit worth $100M.

d. MYCIN of Stanford: This expert program can diagnose disorders of the blood better than a GP but not as well as an expert.
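The idea of reducing an expert's approach to conditional rules and then searching the knowledge base with the facts of a case can be sketched in a few lines. This is only an illustration, assuming a tiny invented rule base about a car that will not start; the rules, fact names, and the `infer` function are hypothetical and are not taken from XCON, MYCIN, or any other system named above. The search procedure used here is simple forward chaining, one of several strategies an expert system might use.

```python
# Hypothetical knowledge base: each rule is (facts that must hold, conclusion).
RULES = [
    ({"engine_cranks", "no_spark"}, "ignition_fault"),
    ({"ignition_fault", "coil_tested_ok"}, "replace_spark_plugs"),
    ({"engine_silent", "lights_dim"}, "recharge_battery"),
]


def infer(facts, rules=RULES):
    """Forward chaining: keep applying rules until no new conclusion appears."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    # Report only what was derived, not the facts that were entered.
    return known - set(facts)


# Enter the facts of one case and see which conclusions follow.
case = {"engine_cranks", "no_spark", "coil_tested_ok"}
print(sorted(infer(case)))
```

Note that the second rule fires only because the first rule's conclusion was added to the known facts, which is why the loop repeats until nothing changes.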

Before the mid 80s, AI was a research activity in universities. With the advent of the first commercial successes of expert systems, a new industry was created. The new industry oversold the possibilities of expert systems, and sold firms software packages with the mistaken idea that the firms could easily create the knowledge base for their applications themselves. The result was a fiasco which discredited the new industry. Critics claim that the AI types are overreaching themselves because computer limitations imply that an expert program is unlikely to be more than just competent and cannot deal with new situations. This is the reason the AI types prefer to call expert systems knowledge-based systems. Such systems in practice act as intelligent assistants, not experts. They change the composition of work groups by replacing assistants and offer the possibility of new services. For example, knowledge-based accounting systems reduce the need for junior accountants and enable accounting groups to answer what-if type questions for their clients.

From an economic perspective, if competency via an expert program is cheaper than competency via training humans, then the expert program industry will continue to grow. Once you have created an expert program, the cost of creating an additional copy is very low. The biggest success in expert systems is in the area of equipment maintenance programs. The use of expert systems continues to grow, and an industry has developed to supply code and consulting services to implement expert systems.

Some advances:

Case-based Reasoning, CBR, Systems:

In expert or knowledge-based systems the knowledge of experts on a subject is distilled into a decision tree of rules that can be used to solve problems in the subject area. Another approach is case-based reasoning systems in which a library of previous cases is stored without trying to distill the cases into a decision tree of rules.
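A minimal sketch of the case-library idea follows, assuming a made-up help-desk library and a very crude similarity measure (the number of matching features). The cases, feature names, and the functions `similarity` and `solve` are hypothetical; real case-based reasoning systems use richer case descriptions and usually adapt the retrieved solution rather than reusing it unchanged.

```python
# Hypothetical case library: (features of a past problem, solution that worked).
CASES = [
    ({"printer": 1, "paper_jam": 1, "error_light": 0}, "clear paper path"),
    ({"printer": 1, "paper_jam": 0, "error_light": 1}, "replace toner"),
    ({"printer": 0, "paper_jam": 0, "error_light": 1}, "restart server"),
]


def similarity(a, b):
    """Count the features on which two problem descriptions agree."""
    return sum(1 for key in a if a[key] == b.get(key))


def solve(new_problem, cases=CASES):
    """Retrieve the most similar stored case and reuse its solution."""
    _, best_solution = max(cases, key=lambda case: similarity(new_problem, case[0]))
    return best_solution


# A new problem is matched against the stored cases; no rules are distilled.
print(solve({"printer": 0, "paper_jam": 1, "error_light": 1}))
```

The contrast with a rule-based system is that nothing is generalized in advance: the stored cases themselves are the knowledge, and the work happens at retrieval time.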

Using a case-based reasoning system, the decision maker with a new problem:

Case Based Reasoning

Other types of quasi-intelligent software: We have already discussed artificial neural networks. Another type of software that can solve difficult problems is a genetic algorithm. This approach simulates biological evolution, finding a solution through recombination and mutation. Possible solutions are divided into a large number of elements that are randomly combined and tested. Successful solutions are 'genetically' combined to produce new solutions and the process continues. Where natural selection can take millions of years, the simulated selection takes place quickly. This approach can provide good solutions to NP-hard problems such as scheduling. Go to the Genetic Java link below for a demo applet of a genetic algorithm.
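The loop of combining, mutating, testing, and keeping successful solutions can be sketched on a toy scheduling problem. Everything specific here is an assumption made for illustration: the job durations, the two-machine setup, the population size, the mutation rate, and the function names. A schedule is a list of 0s and 1s saying which machine runs each job, and a schedule is better when the last machine finishes sooner.

```python
import random

JOBS = [7, 3, 9, 4, 6, 2, 8, 5]  # hypothetical job durations in hours


def makespan(schedule):
    """Finish time when job i runs on machine schedule[i] (0 or 1)."""
    loads = [0, 0]
    for duration, machine in zip(JOBS, schedule):
        loads[machine] += duration
    return max(loads)


def evolve(generations=60, pop_size=30, mutation_rate=0.05, seed=0):
    """Return the best schedule found by a simple genetic algorithm."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in JOBS] for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=makespan)            # shorter makespan is fitter
        parents = population[: pop_size // 2]    # selection: keep the better half
        children = []
        while len(parents) + len(children) < pop_size:
            mother, father = rng.sample(parents, 2)
            cut = rng.randrange(1, len(JOBS))    # one-point crossover
            child = mother[:cut] + father[cut:]
            # Mutation: occasionally move a job to the other machine.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        population = parents + children
    return min(population, key=makespan)


best = evolve()
print(best, makespan(best))
```

Because the better half of each generation survives unchanged, the best schedule never gets worse from one generation to the next. The algorithm does not guarantee the optimum; it only tends to find good schedules quickly.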

Genetic Algorithm

Intelligent Agents

Software that a user sends to a remote server to accomplish a task is called an agent. Intelligent agents are typically described as mobile, autonomous, intelligent, and representing the interests of their user.

Uses of intelligent agents:

The use of intelligent agents will grow because they are easier to program than trying to accomplish the task from a central site, and they impose less load on the network. As the number of agents released into the Internet grows, a major congestion problem will occur. Will servers charge users for memory space and server time to regulate congestion, an economic approach, or will they use a bureaucratic approach such as a queue? There is also a major security problem with agents, because an important class of agents is computer viruses.

Intelligent Agents

Objective: In order to forecast the future impact of computers on society, the first step is to consider the impact computers have already had on society. We will consider the impact of computers on mathematics and the sciences, engineering and policy, business and institutions, and other human activities.

While researchers have a fairly clear idea how a computer functions, they do not know how the brain works. Until researchers have a much better idea how life forms think, whether a computer can ever be programmed to think like a human is an open question. There is a prize, the Loebner Prize, for the first software program that passes the Turing test for intelligence. Von Neumann computers are much better at arithmetic operations than humans, but much worse at pattern recognition. Neural networks, a new type of computer with numerous interconnections between the processing units, much like the human brain, show great promise in pattern recognition. Future computers may combine neural networks with digital computers to take advantage of the strengths of both. A computer, Deep Blue, has already been programmed to beat the world champion in chess. To what extent software running in computers can demonstrate creativity is an open question. Nevertheless, in spite of their limitations, software and hardware have had a major social impact and will have an even greater one in the future.

Can computers think?

An example of the controversial impact of computers on math is the 4 color map problem. This problem asks how many colors are necessary to color a map so that no two adjacent areas have the same color. Example: How many colors would it take to color a map of the US? This problem has been worked on for at least one hundred years. The conjecture was four; however, no one was able to offer a proof until some mathematicians at the University of Illinois programmed their parallel processor. After using 1000 hours of computer time to examine all possible cases, they were able to state that four colors were enough. This computer approach to proofs represents a fundamentally new approach to math. The computer methodology fundamentally challenges the ideal that the goal in proving theorems is simple proofs which can be checked by other mathematicians. Examining the proof for the 4 color map problem requires understanding the software, which is anything but simple. In addition, mathematicians are beginning to study mathematics problems through graphical displays on a computer. This has created a controversial area of math known as experimental mathematics, that is, studying math through computer experiments instead of proofs.
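To see what the problem asks on a small scale, the sketch below colors a map of seven western US states by trial and error (backtracking). It is not the method of the Illinois proof, which had to cover every possible map; it only colors one small map. The choice of states, the restriction of the adjacency list to borders among those seven states, and the function name `color_map` are choices made here for illustration.

```python
# Borders among seven western US states (only borders inside this group).
NEIGHBORS = {
    "WA": ["OR", "ID"],
    "OR": ["WA", "ID", "NV", "CA"],
    "CA": ["OR", "NV", "AZ"],
    "NV": ["OR", "CA", "ID", "UT", "AZ"],
    "ID": ["WA", "OR", "NV", "UT"],
    "UT": ["ID", "NV", "AZ"],
    "AZ": ["CA", "NV", "UT"],
}


def color_map(neighbors, n_colors=4):
    """Return a dict region -> color number, or None if n_colors is too few."""
    regions = list(neighbors)
    assignment = {}

    def place(i):
        if i == len(regions):
            return True  # every region has a color
        region = regions[i]
        for color in range(n_colors):
            # A color is allowed if no already-colored neighbor uses it.
            if all(assignment.get(other) != color for other in neighbors[region]):
                assignment[region] = color
                if place(i + 1):
                    return True
                del assignment[region]  # undo and try the next color
        return False

    return assignment if place(0) else None


print(color_map(NEIGHBORS, 4))
print(color_map(NEIGHBORS, 3))
```

On this map three colors fail, because Nevada touches a ring of five states that itself needs three colors, while four colors succeed. Checking one map is easy; the difficulty of the theorem lies in showing that four colors suffice for every map.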

There are many phenomena in science which simply cannot be studied without supercomputers. For example, analyzing models of the weather requires computing tens of thousands of equations. Until the invention of supercomputers, these equations could not be computed in a reasonable time frame. Numerous physics problems exist which push the limits of computing. Chemists have programs which simulate chemical reactions and thus enable the chemist to tell the outcome without experiment. Complex molecules are studied with computer graphics. Economists have developed several types of world models with up to 15,000 equations; however, these models can be simulated on a workstation.

The important capability which a computer gives an engineer or a policy maker is the computation of an outcome from a simulation model. This allows an engineer or policy maker to analyze different cases without having to build a prototype or to perform experiments. For example, in the design of a car or aircraft wing, the air resistance can be determined by a computer simulation program without having to perform a wind tunnel experiment. Similarly, an economist can forecast the consequences of alternative monetary policies. Computer simulations are both much faster and much cheaper than conducting actual experiments. The value of simulation programs depends on how well the theory upon which the model is built explains the underlying phenomenon. Simulation models based on theories from natural science are generally much more accurate than simulation models based on social science theories. Some engineering projects would not have been possible without computers. The Apollo project, which sent man to the moon, could not have been completed without computers. Social science simulations are generally not very accurate.

One of the most important uses of computers is administration in business and other institutions. When I worked for Douglas Aircraft in the 60s, over 50 percent of the computer use was for administrative tasks, such as accounting and records for government contracts. Currently most corporate and other institutional records have been computerized and are maintained in databases. Even here at UT, student registration is finally being computerized. Businesses and other institutions are increasing their use of computers to make analytical decisions. One example is the growing use of spreadsheets to consider alternatives. Thus, spreadsheets can be considered the business counterpart to engineering simulation programs. As computers become increasingly powerful, the amount of detail considered in business decision-making increases.

Computers are now being used in most human activities. For example, in police work computers have been programmed to match fingerprints, and this application identified a serial killer in California. Before this program, the labor costs were simply too high to match fingerprints unless there were suspects. Hot areas of computation in the arts are music synthesizers and digital movies. Another is programs to choreograph dance steps. In sports, computers are used to analyze athletes' performances. In medicine, a computer program has been developed which stimulates nerves to allow paraplegics to move their muscles. One success story is a young lady, paralyzed from the waist down, whom such a program enabled to ride a bicycle and walk.


norman@eco.utexas.edu Last revised: 1 Jan 06